Image unshredding: Part 1 - shredding images

On the orange site of infinite distractions, I saw this great post about reconstructing images that had been shredded.

I like these sorts of challenges. Like mapping and ray tracing, this is a visually engaging activity. Though I do usually enjoy getting backend stuff to work, it's a little nicer to work on graphics, where you get a bit of visual feedback.

There are a few ways to do this, so I'm just going to try one and see where I get to.

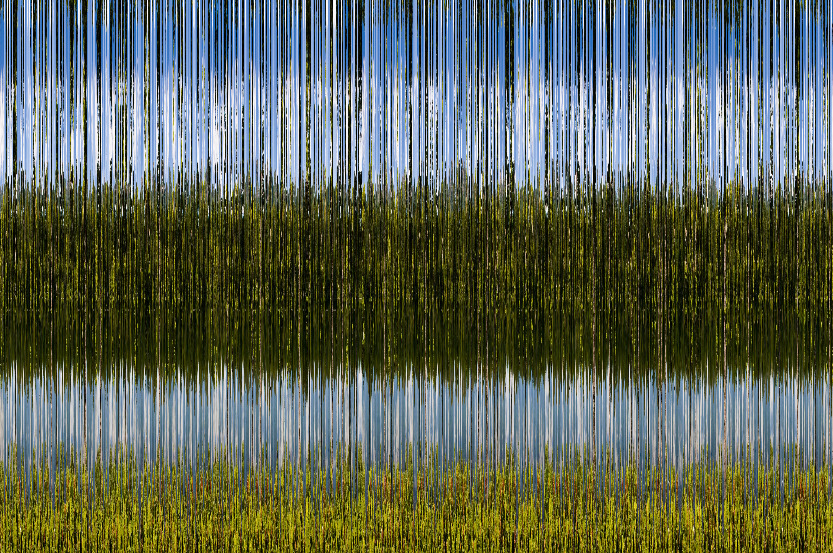

The image I'll be destroying and then trying to put back together

Once Shredded

Here's how I did the above.

The basic process is:

- Read the image into a buffer.

- Get the image width.

- Fill a slice with the column indices, 0 to width - 1.

- Randomly shuffle them.

- Write the image out with its columns in the shuffled order.

And all the king’s horses and all the king’s men couldn’t put that image back together again. Damn…

So, for my first crack at this, I simply tried to create a score for each column in the image, then take the column in the 0th position of the shredded image and find the next column whose score differs least from it.

I tried a few different permutations of adding, multiplying, subtracting and modding the RGBA channels to create one single number per column. I hoped these calculations would exaggerate the differences between columns enough that they'd be easy to sort into similar groups.

This didn't work especially well, but it gave me some promising results as a start.

This approach is what I’d describe as gross scoring. You can kind of see the tree and make out parts of the lake.

In this image, I was scoring with just the green and blue channels.

And with this one, I subtracted the green channel from the red and took that result modulo the blue channel.

As a start, this was a pretty good learning experience.

I feel the idea is pretty solid, and I'll continue with this approach, with a lot of refinements.

I think the big takeaway was that a single score for a whole column is not going to work. But I don't want to compare every pixel in a column against every pixel of every other column to find a match either. I think I can probably cut the image into horizontal halves or quarters and use the same scoring approach on each band to find closeness.

On reflection, I think this approach is pretty sensible. When you study photographic composition, there are a few rules around balance and the like; the rule of thirds jumps out from memory. So I expect that in most photos the central third will contain similar gradients, and likewise the top and bottom thirds.

Anyway, I'll move forward with that assumption and build the next iteration of the unshredder, which looks at a band of pixels, develops a score for it and then finds its nearest neighbor.

I expect this to be a pretty successful approach, though I could be very wrong.

What I know will be an issue is that the reassembled image will start and stop in weird chunks. But I have an idea about that too: something along the lines of looking for the greatest difference between two adjacent columns and using that as a signal that there's a break in the image at that location.
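The break-detection idea can be sketched as a single scan over a reassembled column order, assuming one score per column and absolute difference as the measure. `findBreak` is a hypothetical name, and what to do once the break is found is left open here, as it is in the post.

```go
package main

import (
	"fmt"
	"math"
)

// findBreak scans a reassembled column order and returns the index i at
// which the step from order[i] to order[i+1] has the largest score
// difference, i.e. the most likely place where the image "breaks".
// It returns -1 if there are fewer than two columns.
func findBreak(order []int, scores []float64) int {
	best, bestDiff := -1, -1.0
	for i := 0; i+1 < len(order); i++ {
		d := math.Abs(scores[order[i]] - scores[order[i+1]])
		if d > bestDiff {
			best, bestDiff = i, d
		}
	}
	return best
}

func main() {
	scores := []float64{10, 11, 12, 50, 51} // score of each shredded column
	order := []int{3, 4, 0, 1, 2}           // a reassembled order
	fmt.Println(findBreak(order, scores))    // 1: the jump from column 4 to column 0
}
```

Splitting `order` after index `i` yields the two chunks on either side of the suspected break.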

But that’s firmly a future problem to solve.